Towards a complete processing chain of 6D pose estimation for upper limb neuro-prostheses control

Supervisors:

Jenny Benois-Pineau (LaBRI, University of Bordeaux); co-supervisor: Renaud Péteri (MIA, University of La Rochelle)

Context

The context of this internship is visual assistance for controlling bionic neuroprostheses.

The LaBRI and MIA laboratories are involved in a national project, ANR I-Wrist [1,2], in partnership with the INSIA laboratory at the University of Bordeaux and the Tour de Gassies Rehabilitation Centre.

Visual assistance for controlling bionic neuroprostheses is a rapidly growing field of research, both in computer vision and in robotics for assisting amputees. To control a robotic prosthetic arm, it is necessary to estimate the 6D pose of the object that the wearer wishes to grasp, i.e. its 3D position and 3D orientation. In our study, the prosthesis wearer is equipped with a HoloLens 2 augmented reality headset.
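
As an illustration of what a 6D pose is, the sketch below packs a rotation and a translation into a 4x4 homogeneous transformation matrix and applies it to a point of the object. The function names (`make_pose`, `transform_points`) and the numerical values are hypothetical and chosen only for this example; they are not taken from the I-Wrist code.

```python
import numpy as np

def make_pose(rotation, translation):
    """Pack a 3x3 rotation and a 3-vector translation into a 4x4 homogeneous transform."""
    pose = np.eye(4)
    pose[:3, :3] = rotation
    pose[:3, 3] = translation
    return pose

def transform_points(pose, points):
    """Apply a 4x4 pose to an (N, 3) array of points given in the object frame."""
    return points @ pose[:3, :3].T + pose[:3, 3]

# Hypothetical example: an object rotated by 90 degrees about the camera z-axis
# and located 0.5 m in front of the camera.
theta = np.pi / 2
R_z = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                [np.sin(theta),  np.cos(theta), 0.0],
                [0.0,            0.0,           1.0]])
pose = make_pose(R_z, np.array([0.0, 0.0, 0.5]))

# A point 10 cm along the object's x-axis, expressed in the camera frame.
corner = transform_points(pose, np.array([[0.1, 0.0, 0.0]]))
```

The three rotational and three translational degrees of freedom are what the "6D" refers to; the 4x4 form is simply a convenient way to compose and invert poses.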

Deep-learning-based methods such as DenseFusion [4] perform well in terms of 6D pose estimation, but they require an accurate segmentation of the object in the field of view of the video camera attached to the amputee’s glasses. They also need RGB and depth images to estimate the 6D pose, along with a model of the object to grasp.
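
To make the role of the segmentation mask and the depth image concrete, here is a minimal sketch of how masked depth pixels can be back-projected into a 3D point cloud with a pinhole camera model. It is a generic illustration and not the DenseFusion implementation; the function name and the intrinsics (`fx`, `fy`, `cx`, `cy`) are assumptions made for the example.

```python
import numpy as np

def backproject_masked_depth(depth, mask, fx, fy, cx, cy):
    """Return an (N, 3) point cloud in the camera frame.

    depth: (H, W) array of depths in metres (0 means no measurement).
    mask:  (H, W) boolean array, True on the segmented object.
    fx, fy, cx, cy: pinhole intrinsics in pixels (assumed known from calibration).
    """
    valid = mask & (depth > 0)          # keep object pixels with a depth reading
    rows, cols = np.nonzero(valid)      # rows are v (y), cols are u (x)
    z = depth[rows, cols]
    x = (cols - cx) * z / fx
    y = (rows - cy) * z / fy
    return np.stack([x, y, z], axis=1)

# Toy data: a 4x4 depth image at 1 m with a single object pixel at row 2, column 3.
depth = np.ones((4, 4))
mask = np.zeros((4, 4), dtype=bool)
mask[2, 3] = True
cloud = backproject_masked_depth(depth, mask, fx=2.0, fy=2.0, cx=1.0, cy=1.0)
```

An inaccurate mask lets background pixels leak into this point cloud, which is one reason segmentation quality matters for the downstream pose estimate.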

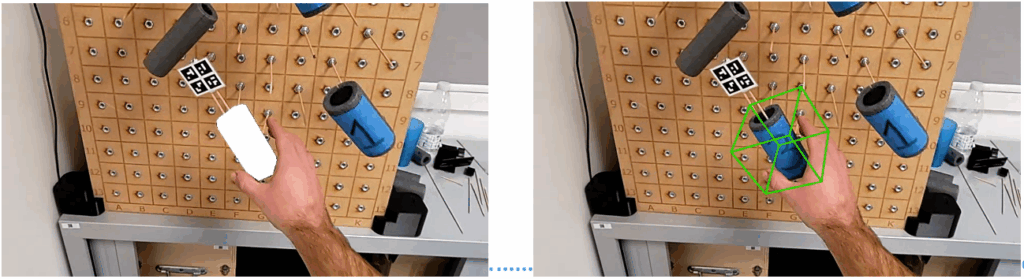

As part of the I-Wrist project, a complete segmentation pipeline based on foundation models and guided by the subject’s fixation points, measured with an eye tracker, has been developed [3]. Our method tracks and segments the object to grasp, using the fixation points in the image as indicators of its position. Moreover, ArUco markers placed in the scene during our experiments provide the ground truth of the object pose (Figure 1b).
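
The idea of using a fixation point as an indicator of the object's position can be sketched as follows. This is a deliberately simple stand-in, not the pipeline of [3]: it assumes a set of candidate binary masks is already available and picks the one closest to the gaze point. The function name and the tie-breaking rule are hypothetical.

```python
import numpy as np

def select_mask_by_fixation(masks, fixation_xy):
    """Return the index of the candidate mask closest to the fixation point.

    masks: list of (H, W) boolean arrays (hypothetical segmentation candidates).
    fixation_xy: (x, y) pixel coordinates of the gaze fixation.
    Returns None if every mask is empty.
    """
    x, y = fixation_xy
    best_index, best_key = None, None
    for index, mask in enumerate(masks):
        rows, cols = np.nonzero(mask)
        if rows.size == 0:
            continue  # skip empty masks
        # Squared distance from the fixation to the nearest mask pixel (0 if inside).
        distance = int(np.min((rows - y) ** 2 + (cols - x) ** 2))
        key = (distance, rows.size)  # closest first, then smallest area
        if best_key is None or key < best_key:
            best_index, best_key = index, key
    return best_index

# Toy data: two 2x2 candidate regions in opposite corners of a 5x5 image.
mask_a = np.zeros((5, 5), dtype=bool)
mask_a[0:2, 0:2] = True
mask_b = np.zeros((5, 5), dtype=bool)
mask_b[3:5, 3:5] = True
chosen = select_mask_by_fixation([mask_a, mask_b], fixation_xy=(4, 3))
```

Falling back to the nearest mask rather than requiring the fixation to lie inside it gives some tolerance to eye-tracker noise.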

Goals of the research internship:

The objectives of this Master’s internship will be to:

· Assess the quality of the pose estimation method using metrics based on the transformation matrices

· Integrate an end-to-end pipeline from the gaze fixation points to the pose estimation of the object to grasp

· Carry out a critical state-of-the-art review of recent methods that estimate an object pose without depth sensors or an object model (for instance [5-7])

· Finally, depending on the time remaining, implement a program that integrates the results into augmented reality on the HoloLens 2.
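
For the first objective, two common metrics computed directly from transformation matrices are the translation error and the geodesic rotation error. The sketch below assumes that both the estimated and the ground-truth poses are given as 4x4 homogeneous matrices in the same camera frame; the function names are hypothetical, and other metrics may be preferred in the actual study.

```python
import numpy as np

def translation_error(T_est, T_gt):
    """Euclidean distance between the estimated and ground-truth translations."""
    return float(np.linalg.norm(T_est[:3, 3] - T_gt[:3, 3]))

def rotation_error_deg(T_est, T_gt):
    """Geodesic angle (in degrees) between the estimated and ground-truth rotations."""
    R_delta = T_gt[:3, :3].T @ T_est[:3, :3]       # relative rotation
    cos_angle = (np.trace(R_delta) - 1.0) / 2.0
    # Clip to guard against values slightly outside [-1, 1] due to rounding.
    return float(np.degrees(np.arccos(np.clip(cos_angle, -1.0, 1.0))))

# Toy data: the estimate is off by 10 degrees about z and by 5 cm in translation.
angle = np.radians(10.0)
T_gt = np.eye(4)
T_est = np.eye(4)
T_est[:3, :3] = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                          [np.sin(angle),  np.cos(angle), 0.0],
                          [0.0,            0.0,           1.0]])
T_est[:3, 3] = [0.03, 0.04, 0.0]

t_err = translation_error(T_est, T_gt)   # in the unit of the translations (metres here)
r_err = rotation_error_deg(T_est, T_gt)
```

Reporting the two errors separately keeps translation and orientation mistakes distinguishable, which a single combined score would hide.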

Duration and funding

· The internship will last 6 months, from February 1 to July 25, 2026.

· The internship will be remunerated for the entire period.

Prerequisites

· Have good skills in Python programming and the PyTorch framework

· Have completed the Computer Vision and AI modules

· Be able to read and write documents in English

· Be curious, enthusiastic about research and keen on AI development for helping people!

https://www.labri.fr/projet/AIV/projet.php

Bibliography

[1]. S. Mick, E. Segas, L. Dure, C. Halgand, J. Benois-Pineau, G. E. Loeb, D. Cattaert, A. de Rugy, Shoulder kinematics plus contextual target information enable control of multiple distal joints of a simulated prosthetic arm and hand. J NeuroEngineering Rehabil 18, 3 (2021).

[2]. Lento, B., Segas, E., Leconte, V. et al. 3D-ARM-Gaze: a public dataset of 3D Arm Reaching Movements with Gaze information in virtual reality. Sci Data 11, 951 (2024). https://doi.org/10.1038/s41597-024-03765-4

[3]. Ander Etxezarreta, Jenny Benois-Pineau, Renaud Péteri, Lucas Bardisbanian, Aymar De Rugy, First-person human sensing for upper limb neuroprosthesis control: 6D pose estimation of objects to grasp. International Conference on Content-Based Multimedia Indexing (CBMI), 2025, Dublin.

[4]. C. Wang et al., "DenseFusion: 6D Object Pose Estimation by Iterative Dense Fusion", 2019, arXiv. doi: 10.48550/ARXIV.1901.04780.

[5]. Corsetti, Jaime; Boscaini, Davide; Oh, Changjae; Cavallaro, Andrea; Poiesi, Fabio. Open-Vocabulary Object 6D Pose Estimation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2024, pp. 18071-18080.

[6]. Örnek, Evin Pınar; Labbé, Yann; Tekin, Bugra; Ma, Lingni; Keskin, Cem; Forster, Christian; Hodan, Tomáš. FoundPose: Unseen Object Pose Estimation with Foundation Features. In: European Conference on Computer Vision (ECCV), 2024, pp. 163-182.

[7]. Deng, Weijian; Campbell, Dylan; Sun, Chunyi; Zhang, Jiahao; Kanitkar, Shubham; Shaffer, Matt E.; Gould, Stephen. Pos3R: 6D Pose Estimation for Unseen Objects Made Easy. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2025, pp. 16818-16828.