Hemispherical vision for pose measurement with large ranges and high resolutions for precision robotics and indoor humanoid localization

Ph.D. supervisors: Guillaume Laurent, Antoine André, Patrick Sandoz

Ph.D. period: starting on October 1st 2025 for 36 months

A PDF of the offer is also available at this link: femto_st_jrl_phd_offer

Context

In robotics, whether industrial, mobile, collaborative, or humanoid, precise and repeatable motion is essential for tasks such as sub-millimetric assembly, safe human-robot interaction, or reliable navigation in indoor environments. Accurate pose estimation is therefore a key challenge. A common approach to pose estimation is to rely on exteroceptive sensors, such as motion capture systems or laser trackers, which offer high precision but are costly, cumbersome, and restricted to controlled environments.

In contrast, onboard vision systems enable greater deployment flexibility but generally suffer from lower accuracy (~10 cm error), particularly in angular measurements (~5 degrees error), due to their reliance on feature-based methods.

The FEMTO-ST Institute (AS2M department) has addressed this problem for microrobotics applications by designing periodic visual targets that are affixed to the robots under study [2, 3]. Spectral analysis of these periodic targets achieves nanometric translational resolution and microradian angular resolution over a 10 cm range, with applications in microrobotics such as micro-assembly and force sensing [4, 5]. However, this approach is limited to microscopy and long-focal-length imaging systems, which restricts its use to narrow angular ranges.
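To illustrate why spectral analysis of a periodic pattern can resolve displacements far below one pixel, here is a minimal Python sketch. It is not the decoding method of [2, 3]: it assumes a one-dimensional, noise-free sinusoidal pattern of known period, and the function name `estimate_shift` and all numerical values are hypothetical choices made for this example. The displacement is read from the phase of the Fourier coefficient at the pattern frequency, so it is only defined modulo one period.

```python
import numpy as np


def estimate_shift(signal, period_px):
    """Estimate the sub-pixel shift of a sinusoidal pattern of known period.

    The result is only defined modulo one period.
    """
    signal = np.asarray(signal, dtype=float)
    x = np.arange(signal.size)
    window = np.hanning(signal.size)  # limits spectral leakage at the borders
    # Single Fourier coefficient evaluated at the pattern frequency 1/period_px.
    coeff = np.sum(window * (signal - signal.mean())
                   * np.exp(-2j * np.pi * x / period_px))
    # For cos(2*pi*(x - shift)/period), the coefficient phase is -2*pi*shift/period.
    return -np.angle(coeff) * period_px / (2 * np.pi)


# Synthetic 1D pattern: 16-pixel period, shifted by 0.37 pixel (illustrative values).
period_px, true_shift = 16.0, 0.37
x = np.arange(1024)
pattern = 0.5 + 0.5 * np.cos(2 * np.pi * (x - true_shift) / period_px)
estimated_shift = estimate_shift(pattern, period_px)
print(f"true shift: {true_shift} px, estimated: {estimated_shift:.4f} px")
```

Because the phase is averaged over every period visible in the image, the estimate improves as more periods are observed. This is one reason the pattern must remain regular in the image, which wide-angle distortion does not guarantee.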

At the CNRS-AIST Joint Robotics Laboratory (JRL), by contrast, recent research focuses on the use of hemispherical cameras (field of view >180 deg.) for tasks such as orientation estimation and visual servoing [6, 7], applied to robotic arms and humanoids [1, 8]. Although these cameras offer a wide measurement range, their measurement resolution is limited by optical distortion and low angular resolution.

This thesis lies at the intersection of hemispherical vision and high-resolution pose estimation for robotics. The main goal is to overcome the limitations of both approaches by adapting spectral pose estimation to wide-angle vision systems embedded in robots. The expected outcome is a compact, high-precision, onboard pose measurement method suited to both precision robotics and large-scale humanoid platforms, bridging the gap between range and resolution.

Research proposal

The PhD project will be structured in three main phases.

Design of a periodic target adapted to hemispherical lenses

The first phase will focus on studying the state-of-the-art approaches in computer vision for pose estimation and the modeling of hemispherical cameras. This will include studying existing methods for spectral analysis of periodic patterns, analyzing the optical characteristics of hemispherical cameras, and modeling their distortions. A key step will be the design and fabrication of periodic targets adapted to hemispherical vision, to ensure homogeneous pose measurement over a wide angular range. This phase will also include the integration of the designed periodic target to validate its performance.
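As an example of what modeling a hemispherical camera can involve, the sketch below projects 3D points with the equidistant fisheye model, in which the image radius grows linearly with the angle from the optical axis (r = f·θ). This model is an assumption made for illustration only; the offer does not specify a camera model, and the function name `project_equidistant` and the intrinsic values are hypothetical.

```python
import numpy as np


def project_equidistant(points_xyz, f_px, cx, cy):
    """Project 3D points (camera frame, z along the optical axis) to pixels
    with the equidistant fisheye model r = f * theta."""
    p = np.asarray(points_xyz, dtype=float)
    x, y, z = p[..., 0], p[..., 1], p[..., 2]
    theta = np.arctan2(np.hypot(x, y), z)  # angle from the axis, may exceed pi/2
    phi = np.arctan2(y, x)                 # azimuth around the optical axis
    r = f_px * theta                       # radial distance in the image
    return np.stack([cx + r * np.cos(phi), cy + r * np.sin(phi)], axis=-1)


# Illustrative intrinsics: 300 px per radian, principal point at (640, 480).
f_px, cx, cy = 300.0, 640.0, 480.0
points = np.array([
    [0.0, 0.0, 1.0],   # on the optical axis
    [1.0, 0.0, 0.0],   # 90 degrees off-axis
    [1.0, 0.0, -0.1],  # slightly behind the camera plane, still projectable
])
pixels = project_equidistant(points, f_px, cx, cy)
print(pixels)
```

Unlike a pinhole projection, this model still maps points located beyond 90 degrees from the optical axis. A planar periodic target therefore appears with a spatially varying period in the image, which is the kind of distortion that a target adapted to hemispherical vision would need to account for.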

Real-time pose estimation and projection compensations

The second phase will aim to validate and study the performance of the designed periodic target in real experimental setups. After implementing real-time pose measurement, this step of the Ph.D. will aim to integrate dynamic compensation of the visual target with respect to the measured pose. This projection system for the visual targets will also be designed to guarantee precise measurement across varying lighting conditions and distances.

Validation and application to robotics platforms

The final phase will consist of validating the complete system on precision robots at FEMTO-ST and on humanoid robots at JRL. The system will be deployed on a humanoid platform to demonstrate its effectiveness for indoor pose measurement. The evaluation will focus on the accuracy, robustness, and effective measurement range of the system in real-world conditions. A comparative analysis with existing approaches will be conducted to assess the performance gains achieved in pose and orientation estimation.

Working conditions

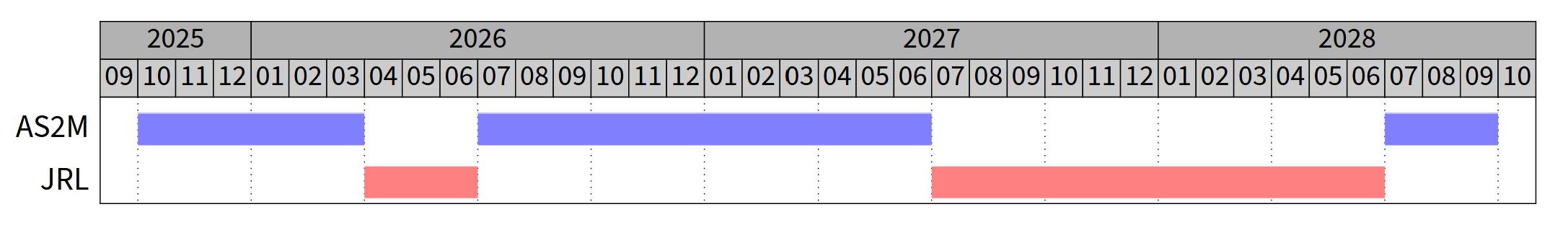

The thesis will be shared between France (AS2M department, FEMTO-ST Institute, Besançon) and Japan (CNRS-AIST JRL, Tsukuba). As shown in the chart, the first and second years of the Ph.D. will be carried out mostly at FEMTO-ST Institute, with one 3-month trip to JRL to conduct experiments. The last part of the thesis will be conducted at JRL during a 1-year stay, before a return to FEMTO-ST Institute to prepare the Ph.D. defense.

The Ph.D. student will receive a gross monthly salary of 2200 € in 2025, rising to 2300 € from 2026.

Expected skills

The candidate, from either an engineering school or a university, should have an academic level of English and a strong background in robotics (direct/inverse kinematics), computer vision (image processing, pose estimation, spectral analysis), and programming (C++, OpenCV, ROS2, Python). Research experience at the Master’s level would be greatly appreciated.

Applications

Candidates should prepare a single PDF file including their CV, a transcript of their master’s results, and a one-page summary of a personal, industrial, or academic project they have completed, including one or two figures.

Applications must be submitted via the CNRS website by midnight on 3 July:

https://emploi.cnrs.fr/Offres/Doctorant/UMR6174-GUILAU-001/Default.aspx

For further information, please contact one of us.

Guillaume Laurent: guillaume.laurent@ens2m.fr

Patrick Sandoz: patrick.sandoz@univ-fcomte.fr

Antoine André: antoine.andre@aist.go.jp

References

[1] M. Benallegue, G. Lorthioir, A. Dallard, R. Cisneros‑Limón, I. Kumagai, M. Morisawa, H. Kaminaga, M. Murooka, A. Andre, P. Gergondet et al., “Humanoid robot rhp friends: Seamless combination of autonomous and teleoperated tasks in a nursing context,” IEEE Robotics & Automation Magazine, 2025.

[2] A. N. André, P. Sandoz, B. Mauzé, M. Jacquot, and G. J. Laurent, “Sensing one nanometer over ten centimeters: A microencoded target for visual in‑plane position measurement,” IEEE/ASME Transactions on Mechatronics, vol. 25, no. 3, pp. 1193–1201, 2020.

[3] ——, “Robust phase‑based decoding for absolute (x, y, θ) positioning by vision,” IEEE Transactions on Instrumentation and Measurement, vol. 70, pp. 1–12, 2020.

[4] B. Mauzé, R. Dahmouche, G. J. Laurent, A. N. André, P. Rougeot, P. Sandoz, and C. Clévy, “Nanometer precision with a planar parallel continuum robot,” IEEE Robotics and Automation Letters, vol. 5, no. 3, pp. 3806–3813, 2020.

[5] A. N. André, O. Lehmann, J. Govilas, G. J. Laurent, H. Saadana, P. Sandoz, V. Gauthier, A. Lefevre, A. Bolopion, J. Agnus et al., “Automating robotic micro‑assembly of fluidic chips and single fiber compression tests based‑on θ visual measurement with high‑precision fiducial markers,” IEEE Transactions on Automation Science and Engineering, vol. 21, no. 1, pp. 353–366, 2022.

[6] A. N. André, F. Morbidi, and G. Caron, “Uniphorm: A new uniform spherical image representation for robotic vision,” IEEE Transactions on Robotics, 2025.

[7] N. Crombez, J. Buisson, A. N. André, and G. Caron, “Dual‑hemispherical photometric visual servoing,” IEEE Robotics and Automation Letters, 2024.

[8] K. Chappellet, M. Murooka, G. Caron, F. Kanehiro, and A. Kheddar, “Humanoid locomanipulations using combined fast dense 3d tracking and slam with wide‑angle depth images,” IEEE Transactions on Automation Science and Engineering, 2023.