Meeting

Thematic day on phase transitions in high-dimensional inference

Scientific themes:

- Theory and methods

- Machine learning

Organisers:

- Bruno Loureiro (DI ENS)

We remind you that, in order to guarantee access to the meeting rooms for all registered participants, registration is free but mandatory.

Registration

7 members of the GdR IASIS and 43 non-members of the GdR are registered for this meeting.

Room capacity: 70 people. 20 places remaining.

Registration is closed for this day.

Announcement

Phase transitions have emerged as a unifying theme across probability, statistics, statistical physics, and machine learning. In many high-dimensional inference problems—ranging from community detection in networks and sparse signal recovery to learning with neural networks—one observes sharp thresholds that separate regimes of possibility, impossibility, and computational hardness. Understanding these transitions has become central to both theory and practice: they reveal fundamental limits of what can be inferred from data, clarify the gap between information-theoretic and algorithmic feasibility, and provide new tools to analyse the behavior of algorithms.

This thematic day will bring together researchers from diverse fields to discuss recent progress, open challenges, and emerging connections around phase transitions in high-dimensional inference.

Invited speakers:

- Freya Behrens (EPFL)

- Louise Budzynski (DIENS)

- Vittorio Erba (EPFL)

- Guilhem Semerjian (LPENS)

- Ludovic Stéphan (ENSAI)

Contributed talks:

- Mohammed Racim Moussa Boudjemaa (IRIT)

- Thomas Tulinski (LPENS)

- Samantha Fournier (IPhT Saclay)

Organisers: Bruno Loureiro (DI-ENS & CNRS) and Marylou Gabrié (LPENS)

Programme

- 9:00 - 9:45: Guilhem Semerjian (LPENS)

- 9:45 - 10:30: Freya Behrens (EPFL)

- 10:30 - 11:00: Coffee Break

- 11:00 - 11:45: Ludovic Stéphan (ENSAI)

- 11:45 - 12:30: Mohammed Racim Moussa Boudjemaa (IRIT)

- 12:30 - 14:00: Lunch Break

- 14:00 - 14:45: Vittorio Erba (EPFL)

- 14:45 - 15:30: Thomas Tulinski (LPENS)

- 15:30 - 16:00: Coffee Break

- 16:00 - 16:45: Louise Budzynski (DIENS)

- 16:45 - 17:30: Samantha Fournier (IPhT Saclay)

Abstracts

Guilhem Semerjian (LPENS)

Generalization in extensive-width neural networks via low-degree polynomials

Low-degree polynomials provide a versatile methodology to build systematic approximations of high-dimensional inference problems. In this talk I will present some recent results obtained by applying this framework to problems of supervised learning, focussing in particular on two-layer neural networks of extensive width.

Freya Behrens (EPFL)

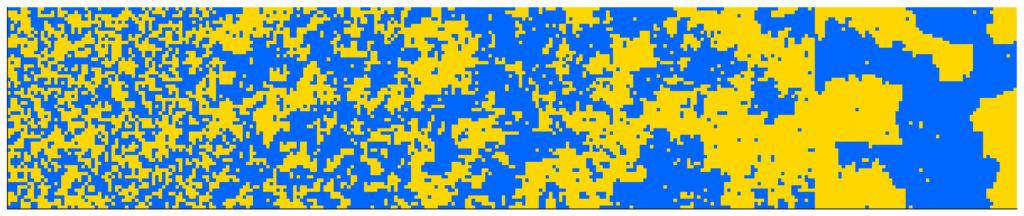

Dynamics on Graphs: Identifying Phase Transitions via Attractors

Local dynamics on graphs describe a wide range of systems, including opinion spreading, epidemics, distributed algorithms, and physical systems such as spin glasses.

The backtracking dynamical cavity method provides an analytical framework for studying these dynamics, on both sparse and dense graphs, by capturing the number of configurations that rapidly converge to a given system state. In this talk, I will describe the method and its applications to various systems. This approach allows us to identify dynamical phase transitions analytically and to answer questions such as: Under which initial bias do nodes in a graph reach consensus? Finally, I will discuss how this analytical idea can be turned into an algorithm for constructing special initializations, e.g. those that converge to consensus faster than typical random initializations.

Ludovic Stéphan (ENSAI)

Phase transitions in tensor completion: from random to pseudorandom sampling

Tensor completion, the higher-order analog of matrix completion, is characterized by a statistical-to-computational gap in the number of samples necessary to retrieve information. We show that this is only a consequence of the random sampling scheme: a small modification suffices to bridge the gap between polynomial and non-polynomial algorithms. We also prove that, akin to many inference problems, finding a non-trivial alignment with the signal is the hardest problem, while the refinement sample complexity does not depend on the tensor order.

Mohammed Racim Moussa Boudjemaa (IRIT)

Whitening Spherical Gaussian Mixtures in the Large-Dimensional Regime

Parameter estimation of Gaussian mixture models (GMMs) can be achieved via tensor-based method-of-moments (MoM) approaches that exploit the low-rank structure of their higher-order moment tensors (or transformations thereof), beyond the mean and the covariance. In particular, a common strategy when dealing with spherical GMMs consists of performing a whitening step that simultaneously projects the data onto a low-dimensional signal subspace and orthonormalizes the components' means. In the classical regime where the number of available samples N is much larger than the data dimension P, whitening the data effectively facilitates GMM estimation, as the required tensor decomposition of high-order moments becomes orthogonal, and therefore much easier to carry out. However, in the large-dimensional regime (where N and P are both large and P ~ N), the empirical second-order moments become spectrally distorted, as predicted by random matrix theory (RMT). Consequently, the standard whitening step fails to orthogonalize the components' means. We show that this issue leads to a substantial performance degradation of the aforementioned tensor-based GMM estimation methods. Specifically, building on deterministic equivalents for spiked covariance models, we derive explicit expressions that quantify the residual alignment between the whitened component means in the large-dimensional limit. Our formulas show that, as expected, this quantity increases with the dimension-to-sample ratio. Then, as our main contribution, we demonstrate that a simple RMT-informed correction to the whitening matrix can asymptotically restore the orthogonality of the components' means after whitening. Applying our corrected whitening completely suppresses the residual alignment asymptotically. As a consequence, this correction allows the GMM parameters to be recovered up to a projection onto the best possible estimate of the signal subspace that contains the means.
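To make the whitening issue concrete, here is a minimal NumPy sketch of the classical (uncorrected) whitening step for a two-component spherical GMM. It is not the authors' code and does not implement their RMT-informed correction. The component means, equal weights, noise level σ = 1 (assumed known) and sample sizes are illustrative assumptions, and the function name `whitened_mean_cosine` is hypothetical. In the population limit the whitened means are orthonormal, so the returned cosine should be close to 0 when P is much smaller than N; when P ~ N, the spectral distortion of the empirical second-order moment leaves a visible residual alignment.

```python
import numpy as np


def whitened_mean_cosine(n, p, seed=0):
    """Cosine between the two whitened component means of a spherical GMM,
    using the classical (uncorrected) whitening step."""
    rng = np.random.default_rng(seed)
    sigma = 1.0                       # noise level, assumed known
    weights = np.array([0.5, 0.5])    # mixture weights (illustrative)

    # Two non-orthogonal means spanning the first two coordinates (illustrative).
    theta = np.pi / 3
    mu = np.zeros((2, p))
    mu[0, 0] = 3.0
    mu[1, :2] = 2.0 * np.array([np.cos(theta), np.sin(theta)])

    # Draw n samples: pick a component, then add isotropic Gaussian noise.
    labels = rng.choice(2, size=n, p=weights)
    x = mu[labels] + sigma * rng.standard_normal((n, p))

    # Empirical version of M2 = E[x x^T] - sigma^2 I, which equals
    # sum_k w_k mu_k mu_k^T in the population.
    m2 = x.T @ x / n - sigma**2 * np.eye(p)

    # Classical whitening matrix W = U_K diag(lambda_K)^(-1/2) from the top-K eigenpairs.
    eigvals, eigvecs = np.linalg.eigh(m2)          # eigenvalues in ascending order
    w = eigvecs[:, -2:] / np.sqrt(eigvals[-2:])

    # Whitened weighted means W^T (sqrt(w_k) mu_k): orthonormal in the population limit.
    b = w.T @ (np.sqrt(weights)[:, None] * mu).T   # column k = whitened mean k
    gram = b.T @ b
    return gram[0, 1] / np.sqrt(gram[0, 0] * gram[1, 1])


n = 2000
for p in (20, 1000):
    cosine = whitened_mean_cosine(n, p)
    print(f"P/N = {p / n:.2f}: cosine between whitened means = {cosine:+.3f}")
```

Only the dimension-to-sample ratio changes between the two runs, so any growth of the cosine reflects the large-dimensional distortion described in the abstract rather than a change in the mixture itself.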

Vittorio Erba (EPFL)

Bilinear index models: feature learning in fully connected networks and attention layers

In the last decade, two sets of empirical observations have been put forth to try to understand and model how neural networks learn: spectral properties of the learned weights, and scaling laws for the generalization error.

Current theoretical models fail to predict and explain such observations in the feature learning regime, i.e. when the networks learn high-dimensional internal representations of the high-dimensional input data.

In this talk I will introduce a solvable class of models, bilinear index models, in which both spectra and scaling laws can be analytically studied in the feature learning regime.

This class of models includes one-hidden-layer extensive-width neural networks with quadratic activations and softmax attention layers as used in transformers, making it possible to study spectra and scaling laws analytically in non-trivial architectures.
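Why both architectures can be called "bilinear" can be checked numerically, under our own reading of the abstract (the talk's precise definition of bilinear index models may differ). A one-hidden-layer network with quadratic activation, f(x) = Σ_a a_a (w_a · x)², depends on its weights only through the matrix S = Wᵀ diag(a) W, since f(x) = xᵀ S x. Likewise, the pre-softmax scores of an attention layer are bilinear in the tokens, with matrix W_Qᵀ W_K. The dimensions, weight scalings and variable names below are arbitrary illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(0)
d, m, r, L = 50, 80, 4, 6   # input dim, hidden width, query/key dim, number of tokens

# (a) One-hidden-layer network with quadratic activation: f(x) = sum_a a_a (w_a . x)^2
W = rng.standard_normal((m, d)) / np.sqrt(d)
a = rng.standard_normal(m) / m
x = rng.standard_normal(d)
net_out = a @ (W @ x) ** 2

# The same function written as a bilinear form x^T S x with S = W^T diag(a) W.
S = W.T @ np.diag(a) @ W
bilinear_out = x @ S @ x

# (b) Attention scores between L tokens: (X W_Q^T)(X W_K^T)^T = X (W_Q^T W_K) X^T.
W_Q = rng.standard_normal((r, d)) / np.sqrt(d)
W_K = rng.standard_normal((r, d)) / np.sqrt(d)
X = rng.standard_normal((L, d))
scores = (X @ W_Q.T) @ (X @ W_K.T).T
scores_bilinear = X @ (W_Q.T @ W_K) @ X.T


def softmax_rows(z):
    """Row-wise softmax, shifted by the row maximum for numerical stability."""
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


attention = softmax_rows(scores_bilinear)   # L x L attention matrix
print(np.isclose(net_out, bilinear_out), np.allclose(scores, scores_bilinear))
```

In both cases the learnable object is a d × d matrix (S or W_Qᵀ W_K), which is what makes its spectrum a natural quantity to track during feature learning.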

Thomas Tulinski (LPENS)

Duality between direct and inverse problems in high-dimensional energy-based models

Over the last decades, our understanding of disordered systems, in which some quenched variables J define the energy landscape of thermal variables σ, has considerably improved. Such progress was made possible by dedicated statistical physics approaches, in particular the replica method, which describes the properties of n → 0 copies of the thermal variables σ in the same quenched landscape J. Energy-based models also appear in unsupervised machine learning, where the thermal variables are the trainable parameters J while the quenched variables are the training data σ_1, ..., σ_M. Boltzmann machines and their extensions offer a simple yet powerful framework to learn arbitrarily complex data distributions and generate new data with similar features. I will present a duality that allows one to transfer the extensive knowledge accumulated so far about disordered systems to the analysis of the training of energy-based models, for which much less is known. I will illustrate this approach on spherical Boltzmann machines, use it to derive the learning phase diagram, and confirm its validity with random matrix theory.

Louise Budzynski (DIENS)

Bayesian inference with structured signals: from planted spin glasses to epidemic models under Nishimori conditions

Inference problems on random graphs can be studied through the statistical physics of disordered systems, revealing sharp phase transitions between feasible and impossible inference regimes. A largely unexplored setting of Bayesian inference is that of structured signals. In this talk, I will focus on the planted spin glass model with correlated disorder, where the signal possesses non-trivial structure. Using the cavity method and numerical inference via Belief Propagation, we uncover the resulting phase diagram and characterize the nature of the transition. I will then discuss how similar mechanisms emerge in epidemic inference models, where evidence of replica symmetry breaking can even appear under the Nishimori conditions.
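For readers less familiar with the cavity approach, the following NumPy sketch runs Belief Propagation on the standard planted ±J spin glass on a sparse Erdős–Rényi graph at the Nishimori temperature, tanh β = 1 − 2ρ, where ρ is the probability of flipping a planted coupling. This is the classical unstructured baseline, not the correlated-disorder model of the talk; the graph size, mean degree c, number of iterations and the function name `planted_spin_glass_bp` are hypothetical choices. For this baseline, non-trivial recovery is expected when c (1 − 2ρ)² > 1, so the returned overlap should be large for small ρ and close to zero for ρ near 1/2.

```python
import numpy as np


def planted_spin_glass_bp(n=2000, c=5.0, rho=0.05, iters=100, seed=0):
    """Run BP on a planted +/-J spin glass (Erdos-Renyi graph, mean degree c)
    at the Nishimori temperature; return the overlap with the planted spins."""
    rng = np.random.default_rng(seed)
    sigma = rng.choice([-1, 1], size=n)            # planted configuration

    # Sparse random graph: about c*n/2 edges, self-loops removed.
    i, j = rng.integers(0, n, size=(2, rng.poisson(c * n / 2)))
    keep = i != j
    i, j = i[keep], j[keep]

    # Couplings consistent with the planted spins, each flipped with probability rho.
    J = sigma[i] * sigma[j] * np.where(rng.random(i.size) < rho, -1, 1)
    beta = np.arctanh(1 - 2 * rho)                 # Nishimori condition: tanh(beta) = 1 - 2 rho

    # Directed edges e: src[e] -> dst[e]; rev[e] is the opposite direction.
    m = i.size
    src, dst = np.concatenate([i, j]), np.concatenate([j, i])
    rev = np.concatenate([np.arange(m, 2 * m), np.arange(m)])
    t = np.tanh(beta) * np.concatenate([J, J])     # tanh(beta J_e), since J_e = +/-1

    h = 0.01 * rng.standard_normal(2 * m)          # cavity fields, small random start
    for _ in range(iters):
        u = np.arctanh(t * np.tanh(h))             # message sent along each directed edge
        field = np.bincount(dst, weights=u, minlength=n)
        h = field[src] - u[rev]                    # drop the message coming back from dst
    u = np.arctanh(t * np.tanh(h))
    field = np.bincount(dst, weights=u, minlength=n)
    # Global spin-flip symmetry: the overlap is only defined up to a sign.
    return abs(np.mean(np.sign(field) * sigma))


for rho in (0.05, 0.4):
    snr = 5.0 * (1 - 2 * rho) ** 2
    print(f"rho = {rho}: c(1-2rho)^2 = {snr:.2f}, overlap = {planted_spin_glass_bp(rho=rho):.3f}")
```

Working at the Nishimori temperature makes the BP marginals Bayes-optimal for this baseline model, which is the setting in which the talk's phase diagram is discussed.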

Samantha Fournier (IPhT Saclay)

Learning with high dimensional chaotic systems

Chaotic dynamics can arise naturally in high-dimensional heterogeneous systems of interacting variables, a simple example being random recurrent neural networks. I will address two learning settings. The first corresponds to learning a periodic signal via the FORCE algorithm, a task that is particularly relevant for motor control in neuroscience. I will present the Dynamical Mean-Field Theory (DMFT) analysis of FORCE training and discuss a number of open questions regarding the space of learnable functions and the stability of the periodic attractor that the dynamics settles onto after training. In the second setting, I will discuss how high-dimensional systems with endogenous chaotic activity can perform a generative task, where the goal is to infer the underlying statistics of the data and to sample from them. The training algorithm uses contrastive Hebbian learning (also known as contrastive divergence), where the negative unlearning term is obtained directly from the out-of-equilibrium dynamics of the neural network. This setting can also be studied through DMFT, which in turn allows us to investigate the following questions: Is the contrastive learning algorithm efficient in the thermodynamic limit? In which region of phase space can the trained system recover the statistics of the data?

This is based on:

- Fournier, Urbani, Statistical physics of learning in high-dimensional chaotic systems, JSTAT 2023

- Fournier, Urbani, Generative modelling through internal high-dimensional chaotic activity, PRE 2025

- Fournier, Urbani, to appear, 2025
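As an illustration of the first setting, here is a minimal NumPy sketch of FORCE learning in the form introduced by Sussillo and Abbott (2009): a chaotic random rate network (coupling gain g > 1) whose linear readout z is fed back into the network and trained online by recursive least squares (RLS) so that z follows a periodic target. The network size, gain, time step, target period and the function name `force_train` are illustrative assumptions, and this is a direct simulation, not the DMFT analysis presented in the talk.

```python
import numpy as np


def force_train(n=300, g=1.5, tau=1.0, dt=0.1, period=60.0, t_train=1200.0, alpha=1.0, seed=0):
    """Train the readout of a chaotic rate network with FORCE (RLS) to output sin(2 pi t / period)."""
    rng = np.random.default_rng(seed)
    J = g * rng.standard_normal((n, n)) / np.sqrt(n)   # random couplings, chaotic for g > 1
    w_fb = rng.uniform(-1.0, 1.0, n)                   # fixed feedback weights
    w_out = np.zeros(n)                                # trained readout weights
    P = np.eye(n) / alpha                              # RLS estimate of the inverse correlation of r
    x = 0.5 * rng.standard_normal(n)                   # network state (pre-activations)

    steps = int(t_train / dt)
    errors = np.empty(steps)
    for k in range(steps):
        r = np.tanh(x)
        z = w_out @ r                                  # current readout
        target = np.sin(2 * np.pi * k * dt / period)
        e = z - target                                 # error before the weight update
        # Recursive least squares update of P and of the readout weights.
        Pr = P @ r
        gain = Pr / (1.0 + r @ Pr)
        P -= np.outer(gain, Pr)
        w_out -= e * gain
        errors[k] = e
        # Euler step of tau dx/dt = -x + J r + w_fb z (readout fed back into the network).
        x += (dt / tau) * (-x + J @ r + w_fb * z)
    return w_out, errors


w_out, errors = force_train()
print("mean |error| over the last 200 time units:", np.abs(errors[-2000:]).mean())
```

Continuing the same dynamics with `w_out` frozen is a natural way to probe whether the trained network has settled onto a stable periodic attractor, which is one of the open questions mentioned in the abstract.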